Aditya Kailas Jadhav

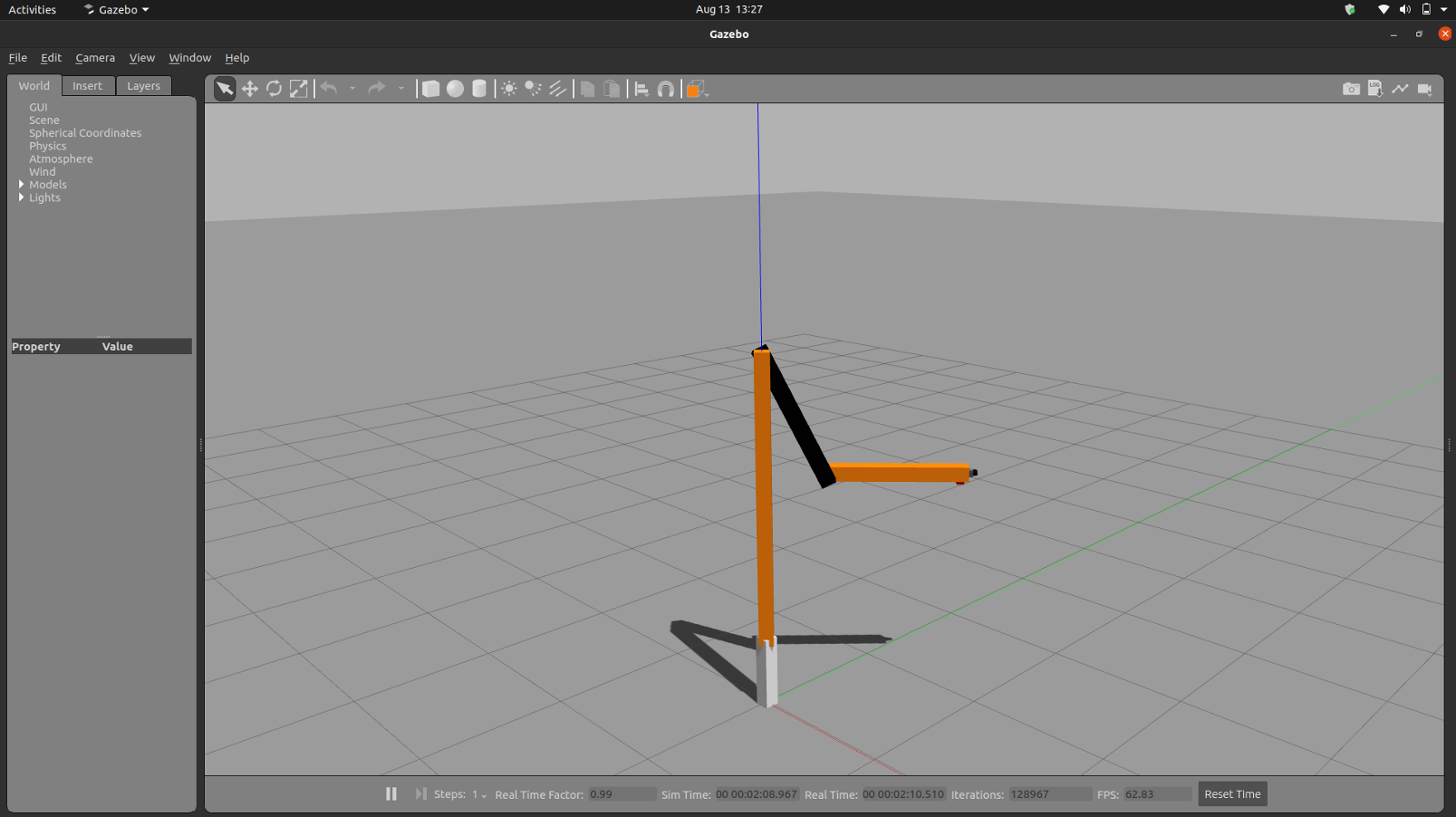

This project involved building a three-degree-of-freedom (3-DOF) robotic manipulator and controlling it within the Gazebo simulator using the Robot Operating System (ROS). The robot was described in a URDF file, to which a continuous joint was added to provide an extra degree of freedom about the z-axis.

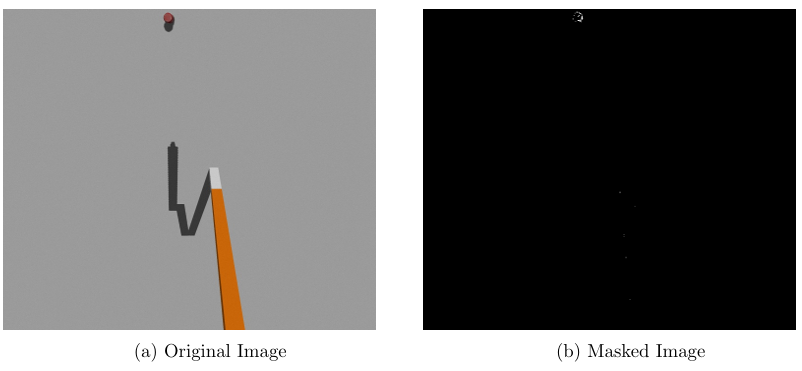

A camera was fixed to the manipulator's tip to detect a Coke can placed randomly in the environment. The control script commands the robot to explore its workspace until the object is found. Object detection was achieved using OpenCV color segmentation: an HSV mask was calibrated to isolate the can from the background. When the number of non-zero pixels in the masked image exceeded a threshold of 60, a flag was published on a ROS topic to stop the robot's movement.

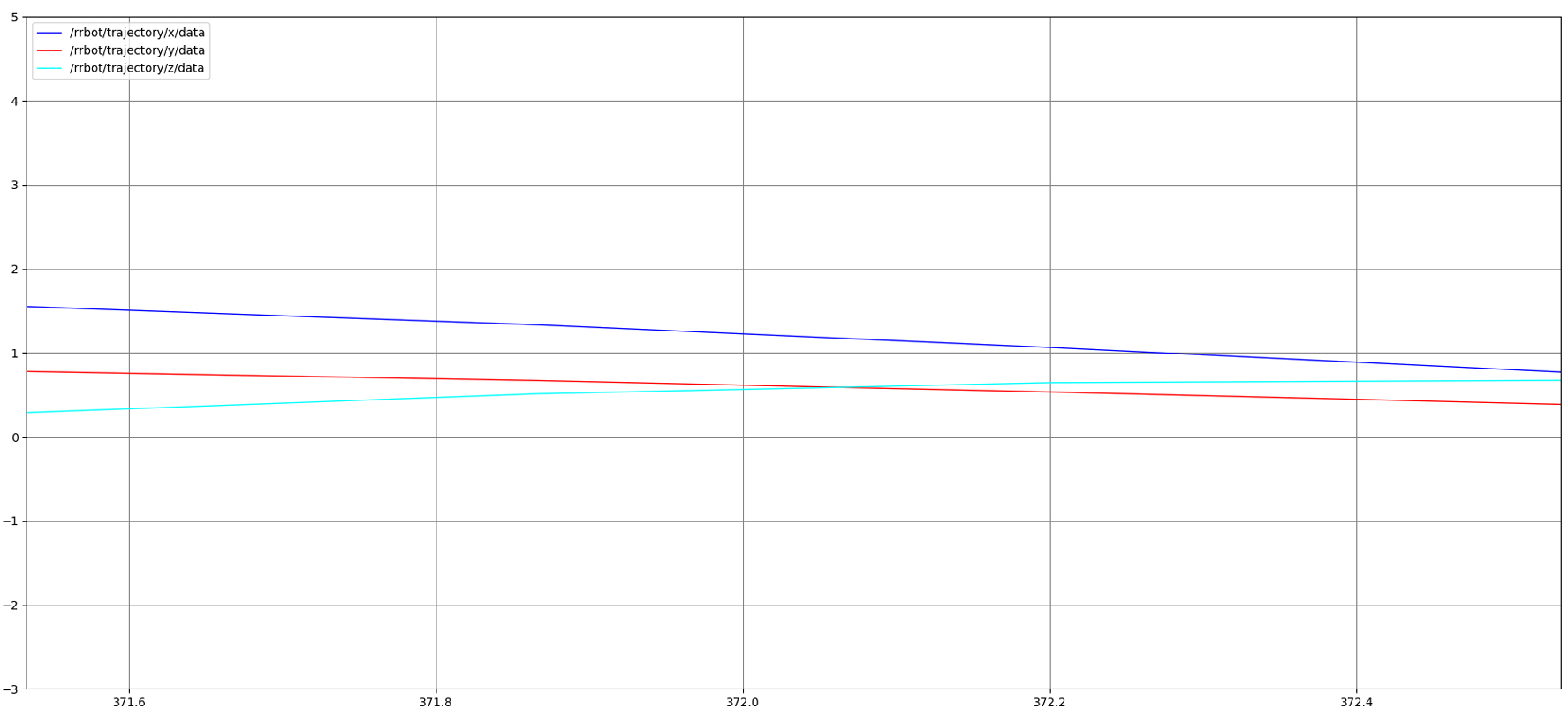

To analyze the robot's movement, forward kinematics equations were implemented to calculate the end-effector's position in x, y, and z coordinates. These coordinates were then published on a ROS topic and plotted using the rqt_gui package, providing a real-time visualization of the robot's trajectory until it successfully located the object.
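As an illustration of the forward kinematics step, the sketch below assumes one common 3-DOF layout: a base joint rotating about the z-axis followed by two revolute joints moving two links in a vertical plane. The project's actual joint arrangement and link lengths are not given here, so the geometry, the parameter names (`base_height`, `l2`, `l3`), and the function name `forward_kinematics` are all assumptions.

```python
import math


def forward_kinematics(theta1, theta2, theta3, base_height, l2, l3):
    """End-effector position (x, y, z) for an assumed 3-DOF arm.

    theta1: base rotation about the z-axis (radians)
    theta2: shoulder angle measured from the horizontal plane (radians)
    theta3: elbow angle relative to the first link (radians)
    base_height: height of the shoulder joint above the origin
    l2, l3: lengths of the two links
    """
    # Horizontal reach of the two links in the arm's vertical plane.
    reach = l2 * math.cos(theta2) + l3 * math.cos(theta2 + theta3)
    # Rotate that reach about the z-axis by the base angle.
    x = reach * math.cos(theta1)
    y = reach * math.sin(theta1)
    # Height is the base offset plus the vertical components of both links.
    z = base_height + l2 * math.sin(theta2) + l3 * math.sin(theta2 + theta3)
    return x, y, z
```

With all joint angles at zero, this model places the end-effector at `(l2 + l3, 0, base_height)`, which gives a quick sanity check before publishing the coordinates for plotting.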